The Capabilities Model allows institutions to assess their support for computationally- and data-intensive research, to identify potential areas for improvement, and to understand how the broader community views Research Computing and Data support. The Capabilities Model was developed by a diverse group of institutions with a range of support models, in a collaboration among Internet2, CaRCC, and EDUCAUSE with support from the National Science Foundation. This Assessment Tool is designed for use by a range of roles at each institution, from front-line support through campus leadership, and is intended to be inclusive of small and large, public and private institutions.

Want a quick summary and intro for your team? See our 1-page overview.

New online Assessment Tool, Community Data Viewer, and Engagement Guide!

We are excited to announce that our new online Assessment Tool is now available, offering a better user experience and streamlining the submission process. Read the announcement here.

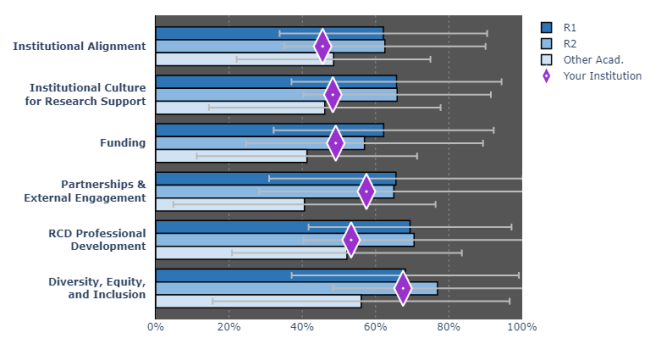

A new Community Data Viewer has also been released, allowing users to explore and visualize the community dataset of assessments submitted by other institutions that have used the Capabilities Model. Users can also benchmark their institution’s capabilities coverage relative to the community of contributors.

And if you’re looking to understand how to begin discussing RCD Capabilities assessment at your institution, especially if you’re a smaller campus or just starting to work toward coordinated RCD capabilities, check out our Engagement Guide.

How will my institution benefit?

The Capabilities Model can help you answer these questions:

- How well is my institution supporting computationally- and data-intensive research, and how can we get a comprehensive view of our support?

- What is my institution not thinking about or missing that the community has identified as significant?

- How can my institution (and my group) identify potential areas for improvement?

Some common uses for the Capabilities Model include:

- To identify and understand areas of strength and weakness in an institution’s support to aid in strategic planning and prioritization.

- To benchmark your institution’s support against peers, often when making an argument for increased funding to remain competitive on faculty recruitment and retention. (See the list of contributors.)

- To compare local institutional approaches to a common community model (i.e., a shared vocabulary), to facilitate communications and collaboration.

Note: We are no longer accepting submissions through the former process! We encourage you to use the new portal to submit your assessments. The new assessment tool will accept submissions any time of year, and as soon as your submission has been reviewed and accepted, you can create benchmarking visualizations using the new Data Viewer tools.

Need help or have questions?

- Email capsmodel-help@carcc.org with questions or to request engagement from one of our trained facilitators.

- Subscribe to the capsmodel-discuss@carcc.org discussion list (click here to join). Hear how other institutions are completing their assessment and using it as part of strategic planning.

- Join our RCD CM Office Hours on the second Tuesday of each month at 1 pm ET.

- Check out slides and recordings from our previous webinars, workshops, etc.

Just getting started with research computing and data (RCD) and looking for a simpler approach?

You’re not alone, and we’re working on tools geared toward smaller and emerging programs. The first product of that work is our Engagement Facilitation Guide for Smaller and Emerging RCD Programs. Next come two new ways to use the tools: an Essentials version that allows smaller and emerging programs to focus on a smaller set of key capabilities, and a Chart-Your-Own-Journey version that lets institutions design a custom assessment covering just the areas they want. These features are currently in development, with a planned release in late April 2025.

Want to get involved?

See the working group page to learn about ongoing development and support work.

This work has been supported in part by an RCN grant from the National Science Foundation (OAC-1620695, PI: Alex Feltus, “RCN: Advancing Research and Education through a national network of campus research computing infrastructures – The CaRC Consortium”), and by an NSF Cyberinfrastructure Centers of Excellence (CI CoE) pilot award (OAC-2100003, PI: Dana Brunson, “Advancing Research Computing and Data: Strategic Tools, Practices, and Professional Development”).